The AI-fuelled memory supply crisis has been difficult to escape—after all, even those literally living under a rock still need RAM for the glowing RGB rig they’re keeping under there.

But it’s important to note that AI datacentres aren’t gobbling up consumer-grade kit specifically. Some sources suggest major players have bought up close to 40% of DRAM wafer production (i.e., raw wafers rather than finished chips). Others are buying up High Bandwidth Memory (HBM), which is purpose-built for the demands of an AI datacentre, and Samsung may soon be raking it in thanks to its “industry-first commercial HBM4.”

Samsung’s asking price for this oh-so-fresh hardware? About $700. As Yonhap News points out, that’s a 20–30% price increase over the previous-generation HBM3E (via Jukan).

Samsung can get away with charging such an eye-watering price for a number of reasons. An industry insider told Yonhap News, “With commodity DRAM profitability now exceeding that of HBM, Samsung no longer has a reason to maximize HBM4 volumes at the expense of its commodity DRAM lines.

“Having proven its HBM competitiveness recovery through best-in-class performance and industry-first mass production shipments, Samsung is now reportedly adjusting capacity carefully from a profitability standpoint.”

In other words, Samsung knows that both commodity DRAM and HBM4 are in high demand, but it isn’t going to churn out oodles more HBM4 to meet that demand. This makes sense beyond profitability, too: should the market turn, Samsung won’t be left holding a whole load of expensive HBM it can no longer sell, or production facilities it no longer needs.

Industry sources suggest that only Nvidia has requested HBM4 so far this year. Other heavy hitters, like Google, are apparently still focused on securing HBM3E for their AI acceleration plans. With a price tag like that, who could blame them?

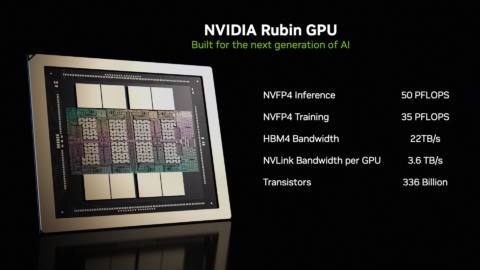

There’s a strong possibility that Nvidia is currently preparing to unveil AI accelerators kitted out with Samsung’s shiniest HBM during the GTC 2026 conference in March. Whether that includes Nvidia’s Vera Rubin, the six-trillion-transistor ‘superchip’ for AI, remains to be seen.

But while we’re on the subject, rumours claim Rubin will be first in line for TSMC’s ultra-advanced A16 process node. As this year’s GTC is slated to begin in San Jose on March 16, we won’t have to wait too much longer to find out.

And as for us gamers, HBM isn’t really our bag. AMD did try it with the R9 Fury and RX Vega cards once upon a time, but those generations didn’t go down well with the gaming masses. We’ll stick to our very limited supply of GDDR, thanks.