Clawdbot—sorry, Moltbot—is everywhere right now, assuming your algorithms are vaguely tech-adjacent. It’s an AI bot that claims to be able to do stuff. Lots of stuff. Of course, alongside such extravagant promises are a whole host of potential security and privacy concerns.

According to its website, which can still be found at clawd.bot as well as molt.bot—Claude-owner Anthropic forced the AI bot to change its name because of trademark issues—it says that it’s “the AI that actually does things: clears your inbox, sends emails, manages your calendar, checks you in for flights. All from WhatsApp, Telegram, or any chat app you already use.”

🦞 BIG NEWS: We’ve molted!Clawdbot → MoltbotClawd → MoltySame lobster soul, new shell. Anthropic asked us to change our name (trademark stuff), and honestly? “Molt” fits perfectly – it’s what lobsters do to grow.New handle: @moltbotSame mission: AI that actually does…January 27, 2026

In fact, it’s generated so much hype right now that Cloudflare recently saw its stocks shoot up as a result, because its CDNs could help bolster the kinds of fast connections needed for Moltbot to function well. Stocks have since started to dip again, though.

So, what’s all the fuss about? Well, it’s such a big deal because you can use it to, erm, remotely play YouTube videos, I guess?

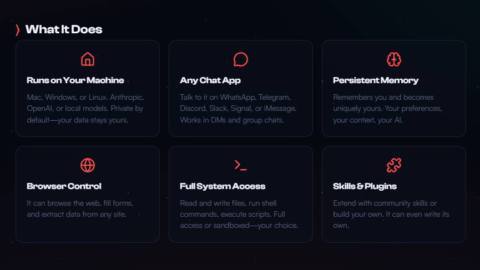

At least, that seems to be the way that many who are dipping their toes into the AI sphere are talking about it. Really, though, the idea is much more than that. The bot is essentially meant to act as a middleman between all of your different apps/accounts and your AI chatbot subscriptions—or at least as many apps and accounts you give it access to.

The end result is that you should be able speak to Moltbot via your usual messaging apps, telling it what to do, and it can go and do these things in the background as long as you’ve linked it up with all the apps and services it might need to get the job done. It’s also supposed to have leeway to be proactive in what it does to help you.

Part of what seems so appealing about it, at least for me, is that Moltbot itself runs locally, on whatever device you want. Or a cloud server of your choice if you choose to go down that route. It sits on a machine of your choosing and stores all its ‘memory’ persistently on there as Markdown, which initially sounds great if, like me, you’re interested in having control over your data.

In some ways it seems true that it does give you more control over this data. You can control everything about the bot locally, or through remote connection, and version control it through Git, which is great for someone like me who loves apps like Obsidian. On the other hand, because it’s essentially an intermediary between your apps and other AI model subscriptions, the actual brainpower that the AI is using is still non-local.

Essentially, the way this works is you follow a command-line setup to get it installed on your device, and you then have to tinker around copying tokens from all your different AI subscriptions, as well as the apps and services you want the bot to be able to interface with, and give them to the bot through its Control UI. You have your ‘Gateway’, which is the device that houses Moltbot, and its Control UI, which you jump onto to manage all these app connections and so on.

But once it’s all set up, you can interact with it through your usual messaging apps like WhatsApp or Discord.

Of course, you could use this to turn on YouTube videos remotely, but that would be missing the point. The best I’ve seen an actual use case put across is by SaaS-maker Alex Finn talking to entrepreneur Greg Isenberg:

“You are going to have an AI employee that’s tracking trends for you, building you product, delivering you news, creating you content, running your whole business … You’re going to be running a business by yourself with AI employees … It’s for people who want to actually improve their life, get more productivity, and not just kind of have a Tamagotchi toy.”

“I talked about the fact that I’m buying a Mac Studio to run it on in the next couple of weeks, and so it started going and it started looking at different ways to run local models on a Mac Studio, overnight, while I was sleeping, without me asking, and it created an entire report for that.”

In other words, you can treat it like an actual employee, discuss your goals and so on, and set it up in a way as to be proactive and suggest ideas and do research for you, then brief you on what it’s done. Moltbot even took the initiative to code a new feature for his software based on a new trend that it spotted on X.

Naturally, this could all add up to a lot of AI ‘brainpower’ that you’re paying for, ie, a lot of tokens, as this guy found out:

Finn argues that this is something that needs to be considered and accounted for when you set it up. Apparently there are ways to limit what Moltbot uses its tokens for, but I reckon I’d be a little worried each night as I went to bed that I would wake up to a big bill.

Of course, for Finn, these costs are slim anyway considering he envisions such AI bots acting as actual employees; it’s much less than a salary.

Finn also recommends being careful with what you give Moltbot access to, not giving it access to anything of critical importance. This is in response to concerns—very reasonable ones, in my opinion—over the security and privacy threats Moltbot raises.

Security risks

Let’s start with the possible straight-up hacking scenario. Security researcher and hacker Jamieson O’Reilly detailed in a lengthy X article how you can use web traffic scrapers such as Shodan or Censys to spot vulnerable Moltbot Control UIs. Hundreds of publicly visible Moltbot Controls showed up on these services, and a small portion of these “ranged from misconfigured to completely exposed.”

https://t.co/W75HavRqBlJanuary 25, 2026

Some have pushed back against scaremongering over this particular issue, though. Cybersecurity YouTuber Low Level, for instance, points out that the vast majority of those hundreds of visible Moltbot instances that were visible couldn’t actually be hacked, but were simply visible.

From my perspective, such configuration missteps in themselves don’t point at a problem with Moltbot, as it’s down to each user to ensure they’ve configured things correctly. But we’ll return to that shortly.

The bigger issue, according to Low Level, is prompt injection. LLMs don’t distinguish very clearly between a user command and just any old data that it feeds; that’s just the nature of probabilistic machine learning models. As such, there’s a chance that data from elsewhere might be used to “inject” commands to trick the AI into doing something you never wanted it to do.

This kind of thing is a known issue with AI. In fact, researchers have shown how Gemini can be used to inject prompts into calendar invites to leak Google Calendar info (via Mashable). And Low Level says his producer’s wife managed to trick her husband’s Moltbot into thinking she was him by sending him an email, and got it to play Spotify on his Gateway computer. I don’t know how much I’d be giving AI the reins for, just yet, given such issues.

What is artificial general intelligence?: We dive into the lingo of AI and what the terms actually mean.

To me, the real problem is that, in going viral, Moltbot is being touted by so many as the next big thing for beginners. But as the number of potential security issues as well as the level of awareness, restraint, and technical ability to prevent these issues increases, so too, I think, should the caution with which we recommend it to anyone.

Not to toot my own horn, but I’m quite techy myself, although I haven’t dived too much into the AI sphere yet, and I’m hesitant to try out Moltbot for this very reason. If I can’t make that choice for myself then I certainly can’t recommend it to others, unless they’re well-versed in all things AI, networking, and cybersecurity. That’s why it’s kind of frustrating that so much content surrounding Moltbot right now is touting it as something fairly beginner-friendly that can make you tons of money.

Saying that, though, I can’t deny how impressive it seems to be, if we move beyond the simpler use cases. It’s a bit of a mask-off moment for me, to see just what AI is now capable of when given free rein. I just wonder whether those security concerns will be ironed out in the years to come—whether it’s ever truly possible to eradicate prompt injection—and whether the number of tokens required for it to be useful will make it useful for anyone other than content creators and other ‘solopreneur’ types.